Deploying Python Lambda functions using Docker

By Florian Maas on October 9, 2022

Estimated Reading Time: 8 minutes

In the past, I have often seen Python Lambda functions deployed by zipping the source code together with the installed dependencies and then using the fromAsset method. One drawback of this approach is that the resulting .zip file is limited to 50 MB.

A workaround for this limitation is to use Lambda layers, but the function and all of its layers together still "cannot exceed the unzipped deployment package size quota of 250 MB"[source].

However, I don't like this solution for various reasons:

- It opens up the gates to Python Dependency Hell.

- It becomes a complex exercise to recreate the Lambda's virtual environment locally.

- The file size limit usually requires manual modification of the virtual environment before zipping it.
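The size limit is easy to check before deploying. A minimal sketch that sums the size of the directory you would otherwise zip (code plus installed dependencies) and compares it to the 250 MB unzipped quota. The helper names are hypothetical, and treating the quota as 250 × 1024 × 1024 bytes is an assumption about how AWS counts it:

```python
import os
from pathlib import Path

# Assumption: "250 MB" is read as 250 MiB, i.e. 250 * 1024 * 1024 bytes.
UNZIPPED_QUOTA_BYTES = 250 * 1024 * 1024


def directory_size(root: Path) -> int:
    """Sum the sizes of all regular files below root, skipping symlinks."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            if path.is_file() and not path.is_symlink():
                total += path.stat().st_size
    return total


def fits_unzipped_quota(root: Path, quota: int = UNZIPPED_QUOTA_BYTES) -> bool:
    """Return True if the unzipped contents of root stay below the quota."""
    return directory_size(root) < quota
```

Calling `fits_unzipped_quota(Path("package"))` on a hypothetical `package` directory tells you whether pruning the virtual environment would be needed at all.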

Therefore, I prefer to deploy Lambda functions as Docker images, which can be up to 10 GB in size. In this tutorial, I will elaborate on my preferred approach to deploying multiple Lambda functions using a single Docker image.

The source code for this tutorial can be found on GitHub.

Creating the project

To start, let's create a directory for our project:

```bash
mkdir aws-cdk-py-docker-lambdas
cd aws-cdk-py-docker-lambdas
```

Then, we create two directories: one for our CDK code and one for our Python code.

```bash
mkdir cdk python
```

Part 1: Python

First, we will create the Python code for our Lambda functions. Note: it is recommended to use Python 3.9 for this tutorial, since the Docker image we build later is based on Python 3.9. If you do not have that version installed locally and you are unsure how to manage multiple versions of Python, I recommend taking a look at pyenv.

In this tutorial we will use Poetry for managing the dependencies, but the changes required to make this tutorial work with another dependency manager are minimal.

To initialize the Python environment with Poetry, run:

```bash
cd python
poetry init
```

Navigate through the prompts, and then add pandas to our environment so we can later verify that our installed packages can be imported in our Lambda functions:

```bash
poetry add pandas
```

Then, install your virtual environment with:

```bash
poetry install
```

Together, these commands create our poetry.lock file, which pins the exact versions of our dependencies and guarantees that the dependencies we use locally will also be the ones installed by our Dockerfile later.

Now, there are two things we should do: We have to write our Python functions, and we have to create a Dockerfile. Let's start with the first step.

To do so, create the following folder structure:

```text
└── python
    ├── poetry.lock
    ├── pyproject.toml
    └── src
        ├── __init__.py
        ├── lambda_1
        │   ├── __init__.py
        │   └── index.py
        └── lambda_2
            ├── __init__.py
            └── index.py
```
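If you prefer to create this layout from a script rather than by hand, a minimal sketch with the standard library could look like the following. The `scaffold` helper is hypothetical; it only creates the empty `src` files and leaves `poetry.lock` and `pyproject.toml` to Poetry:

```python
from pathlib import Path


def scaffold(project_root: Path, lambda_names: tuple[str, ...] = ("lambda_1", "lambda_2")) -> list[Path]:
    """Create python/src with one package (an __init__.py plus index.py) per Lambda function.

    Existing files are left untouched, so the helper is safe to re-run.
    Returns the paths of all files in the layout.
    """
    src = project_root / "python" / "src"
    files = [src / "__init__.py"]
    for name in lambda_names:
        files.append(src / name / "__init__.py")
        files.append(src / name / "index.py")
    for path in files:
        path.parent.mkdir(parents=True, exist_ok=True)
        path.touch(exist_ok=True)  # touch() creates the file if needed and never truncates it
    return files
```

Running `scaffold(Path("."))` from the `aws-cdk-py-docker-lambdas` directory would create the empty files shown in the tree.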

The contents of src/lambda_1/index.py are:

```python
import json


def handler(event, context):
    print('request: {}'.format(json.dumps(event)))
    return {
        'statusCode': 200,
        'headers': {
            'Content-Type': 'text/plain'
        },
        'body': 'Ran lambda_1 successfully!'
    }
```

It would also be good to test that we can actually import and use pandas, so let's add the following contents to src/lambda_2/index.py:

```python
import json

import pandas as pd


def handler(event, context):
    print('request: {}'.format(json.dumps(event)))
    # Printing a pandas Series proves that the installed dependencies can be imported
    print(pd.Series([1, 3, 5, 6, 8]))
    return {
        'statusCode': 200,
        'headers': {
            'Content-Type': 'text/plain'
        },
        'body': 'Ran lambda_2 successfully!'
    }
```
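Because each handler is a plain Python function, it can be exercised locally before Docker is involved at all. A minimal sketch, assuming the lambda_1 handler is inlined as a copy so the snippet runs on its own (in the project you would import it from `lambda_1.index` with `src` on the path), and passing `None` as the context, which only works because the handler never uses it:

```python
import json


# Copy of the handler in src/lambda_1/index.py, inlined so this sketch is self-contained.
def handler(event, context):
    print('request: {}'.format(json.dumps(event)))
    return {
        'statusCode': 200,
        'headers': {
            'Content-Type': 'text/plain'
        },
        'body': 'Ran lambda_1 successfully!'
    }


def test_lambda_1_handler():
    # The handler ignores `context`, so None is a sufficient stand-in here.
    response = handler({'hello': 'world'}, None)
    assert response['statusCode'] == 200
    assert response['headers']['Content-Type'] == 'text/plain'
    assert response['body'] == 'Ran lambda_1 successfully!'
```

Named with a `test_` prefix, the function would also be collected by pytest, assuming pytest is added as a dev dependency.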

Now, let's create the Dockerfile in the python directory. Its contents should be as follows:

```dockerfile
FROM public.ecr.aws/lambda/python:3.9-arm64

ENV POETRY_VERSION=1.1.13

# Install poetry
RUN pip install "poetry==$POETRY_VERSION"

# Copy only the dependency files first, so the installed dependencies are cached in their own Docker layer
WORKDIR ${LAMBDA_TASK_ROOT}
COPY poetry.lock pyproject.toml ${LAMBDA_TASK_ROOT}/

# Install the dependencies into the image's Python (no virtualenv); --no-root skips installing the project itself
RUN poetry config virtualenvs.create false && poetry install --no-interaction --no-ansi --no-root

# Copy our Lambda functions to the Docker image
COPY src ${LAMBDA_TASK_ROOT}/

# Set the CMD to your handler (could also be done as a parameter override outside of the Dockerfile)
CMD [ "lambda_1.index.handler" ]
```

There's no rocket science here. We install Poetry, copy the files needed to create our environment, install the environment, and then copy our Lambda functions. The last step sets the default CMD to our first Lambda function, but this default can easily be overridden by passing a different handler as the command when spinning up a Docker container from the image.
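The handler string is a dotted path: everything before the last dot names a module (here lambda_1/index.py below ${LAMBDA_TASK_ROOT}) and the final part names the function inside it. The sketch below approximates that lookup with `importlib`; it is a simplification for illustration rather than the Lambda runtime's actual code, and `resolve_handler` is a hypothetical name:

```python
import importlib
from typing import Callable


def resolve_handler(handler: str) -> Callable:
    """Turn a handler string such as 'lambda_1.index.handler' into a callable.

    The part before the last dot is imported as a module, and the part after
    it is looked up as an attribute of that module.
    """
    module_name, _, function_name = handler.rpartition(".")
    if not module_name or not function_name:
        raise ValueError(f"Handler must look like 'module.function', got {handler!r}")
    module = importlib.import_module(module_name)
    return getattr(module, function_name)
```

Inside the image, `resolve_handler("lambda_1.index.handler")` would import lambda_1/index.py from the task root, which is why `COPY src ${LAMBDA_TASK_ROOT}/` places the lambda_1 and lambda_2 packages directly in that directory.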

We can test our Dockerfile and Lambda functions by running the following commands from the python directory:

```bash
docker build --platform linux/arm64 -t lambdas .
docker run -p 9000:8080 lambdas
```

and then in a second terminal session, run:

```bash
curl -XPOST "http://localhost:9000/2015-03-31/functions/function/invocations" -d '{}'
```

This should output the following to the console:

```json
{
  "statusCode": 200,
  "headers": {"Content-Type": "text/plain"},
  "body": "Ran lambda_1 successfully!"
}
```
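If you would rather script this check than run curl, the same request can be sent with Python's standard library. A minimal sketch; `build_invocation_request` and `invoke_local` are hypothetical helpers, the URL is the one from the curl command, and it assumes the container started with `docker run -p 9000:8080 lambdas` is running:

```python
import json
import urllib.request

# Endpoint exposed by the container started with `docker run -p 9000:8080 lambdas`.
LOCAL_INVOKE_URL = "http://localhost:9000/2015-03-31/functions/function/invocations"


def build_invocation_request(event: dict, url: str = LOCAL_INVOKE_URL) -> urllib.request.Request:
    """Create a POST request that carries the event as a JSON body."""
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke_local(event: dict, url: str = LOCAL_INVOKE_URL, timeout: float = 30.0) -> dict:
    """Send the event to the locally running function and decode its JSON response."""
    with urllib.request.urlopen(build_invocation_request(event, url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the container running, `invoke_local({})["body"]` should return the same body as the curl call.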

Awesome! Our Lambda function is working. Now let's get to the second part of our project: The CDK code.

Part 2: CDK

For this section, please make sure you have the AWS CLI installed and configured. Then, install the AWS CDK CLI globally with:

```bash
npm install -g aws-cdk
```

Now, let's navigate into the cdk directory we created earlier and initialize our CDK project:

```bash
cd ../cdk  # assuming we are still in the python subdirectory
cdk init --language typescript
```

Then, let's rename some of the files for clarity:

```bash
mv bin/cdk.ts bin/cdk-lambda-python.ts
mv lib/cdk-stack.ts lib/cdk-lambda-python-stack.ts
```

and change the corresponding lines in package.json and cdk.json:

package.json

```json
"cdk-lambda-python": "bin/cdk-lambda-python.ts"
```

cdk.json

```json
"app": "npx ts-node --prefer-ts-exts bin/cdk-lambda-python.ts",
```

Then change the contents of bin/cdk-lambda-python.ts to:

```typescript
#!/usr/bin/env node
import 'source-map-support/register'
import * as cdk from 'aws-cdk-lib'
import { CdkLambdaPythonStack } from '../lib/cdk-lambda-python-stack'

const app = new cdk.App()
new CdkLambdaPythonStack(app, 'CdkLambdaPythonStack', {})
```

and let's change the contents of lib/cdk-lambda-python-stack.ts to:

```typescript
import * as cdk from 'aws-cdk-lib'
import { Construct } from 'constructs'
import { aws_lambda as lambda } from 'aws-cdk-lib'
import * as path from 'path'
import { Architecture } from 'aws-cdk-lib/aws-lambda'

export class CdkLambdaPythonStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props)

    // Both functions are built from the Dockerfile in the python directory;
    // only the handler passed as cmd differs.
    new lambda.DockerImageFunction(this, 'LambdaFunction_1', {
      code: lambda.DockerImageCode.fromImageAsset(
        path.join(__dirname, '../../python'),
        {
          cmd: ['lambda_1.index.handler'],
        }
      ),
      // The base image is arm64, so the function must run on ARM as well
      architecture: Architecture.ARM_64,
    })

    new lambda.DockerImageFunction(this, 'LambdaFunction_2', {
      code: lambda.DockerImageCode.fromImageAsset(
        path.join(__dirname, '../../python'),
        {
          cmd: ['lambda_2.index.handler'],
        }
      ),
      architecture: Architecture.ARM_64,
    })
  }
}
```

The code is quite self-explanatory: we create both Lambda functions from the Docker image defined in the python directory, and we override the cmd argument to specify which handler each function should call.
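The only difference between the two functions is the cmd value, and each handler string follows directly from the folder layout under src. If you add more functions, a small helper could derive these strings instead of typing them by hand. A hypothetical sketch, assuming every function lives in src/<name>/index.py and exposes a function called handler:

```python
from pathlib import Path


def discover_handlers(src_dir: Path, module: str = "index", function: str = "handler") -> dict[str, str]:
    """Map each package below src_dir that contains <module>.py to a handler string.

    For the layout in this tutorial this yields
    {'lambda_1': 'lambda_1.index.handler', 'lambda_2': 'lambda_2.index.handler'}.
    """
    handlers = {}
    for package in sorted(p for p in src_dir.iterdir() if p.is_dir()):
        # Directories without the expected module (e.g. __pycache__) are skipped
        if (package / f"{module}.py").is_file():
            handlers[package.name] = f"{package.name}.{module}.{function}"
    return handlers
```

Running `discover_handlers(Path("../python/src"))` from the cdk directory would list one handler string per function to pass as cmd.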

Now, it's time to actually deploy our stack! Before deploying it, let's run the following commands:

```bash
cdk bootstrap
cdk synth
```

The first command makes sure that the resources required to perform deployments are defined in an AWS CloudFormation stack called the bootstrap stack[source]; it only needs to be run once per AWS account and region. The second command synthesizes our app into a CloudFormation template[source].
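To check what cdk synth generated, you can inspect the template it writes to the cdk.out directory. A minimal sketch, assuming the file is named cdk.out/CdkLambdaPythonStack.template.json and that each function appears as an `AWS::Lambda::Function` resource whose handler is stored under `ImageConfig.Command`; the helper names are hypothetical:

```python
import json
from pathlib import Path


def image_commands(template: dict) -> dict:
    """Return {logical_id: command} for every Lambda function resource in a template."""
    commands = {}
    for logical_id, resource in template.get("Resources", {}).items():
        if resource.get("Type") != "AWS::Lambda::Function":
            continue
        props = resource.get("Properties", {})
        commands[logical_id] = props.get("ImageConfig", {}).get("Command")
    return commands


def load_template(path: Path) -> dict:
    """Read a synthesized CloudFormation template from disk."""
    return json.loads(path.read_text(encoding="utf-8"))
```

Calling `image_commands(load_template(Path("cdk.out/CdkLambdaPythonStack.template.json")))` from the cdk directory should list two functions, each with its own handler.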

With that out of the way, we can now deploy our Lambda functions! To do so, run the following command:

```bash
cdk deploy
```

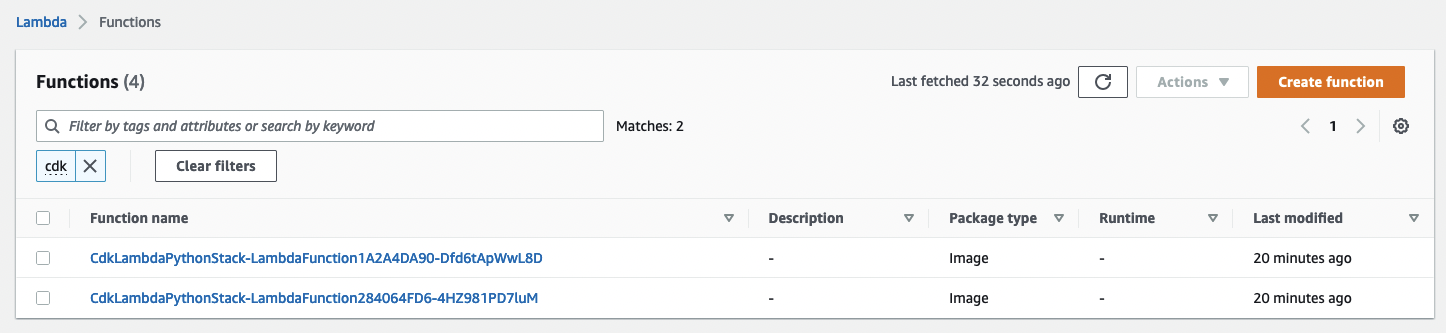

If this runs successfully, we can see our Lambdas by navigating to AWS Lambda in the AWS Console:

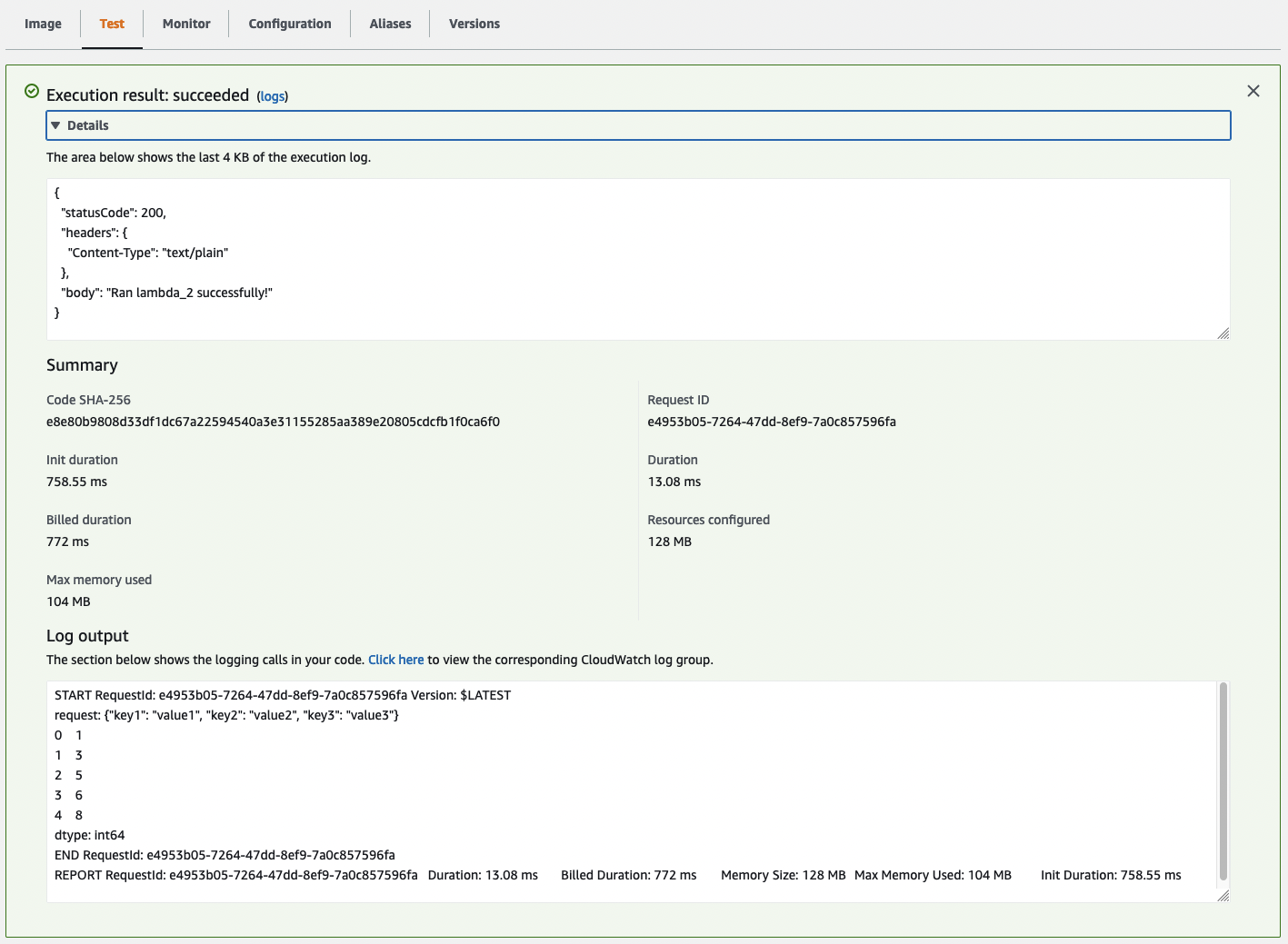

To test our Lambda functions, let's open the Lambda function whose name contains the text LambdaFunction2 (maybe we should not use a number as the last character of our Lambda's name next time), navigate to Test, and click the orange Test button. The execution result should show the response returned by lambda_2, with the body "Ran lambda_2 successfully!", and the log output should include the printed pandas Series.

Awesome! Seems like we were successful in creating our Lambda functions.
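The same check can also be scripted instead of clicked through in the console. A minimal sketch, assuming boto3 is installed and uses the same credentials as the AWS CLI, and that you pass the generated function name shown in the console. `invoke_and_decode` is a hypothetical helper that takes the client as a parameter, so it can be tried with a stand-in client without AWS access:

```python
import json


def invoke_and_decode(client, function_name: str, event: dict) -> dict:
    """Synchronously invoke a Lambda function and return its decoded JSON response.

    `client` is expected to behave like boto3.client("lambda"), whose invoke()
    returns the response body as a readable stream under the "Payload" key.
    """
    response = client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=json.dumps(event).encode("utf-8"),
    )
    # "FunctionError" is only present when the function itself raised an error
    if "FunctionError" in response:
        raise RuntimeError(f"{function_name} failed: {response['Payload'].read()!r}")
    return json.loads(response["Payload"].read())
```

With real credentials this would be called as `invoke_and_decode(boto3.client("lambda"), "<generated function name>", {})`, and the returned body should read "Ran lambda_2 successfully!".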

I hope this tutorial was useful to you! If you have any feedback, comments, or questions, please don't hesitate to reach out.

Florian